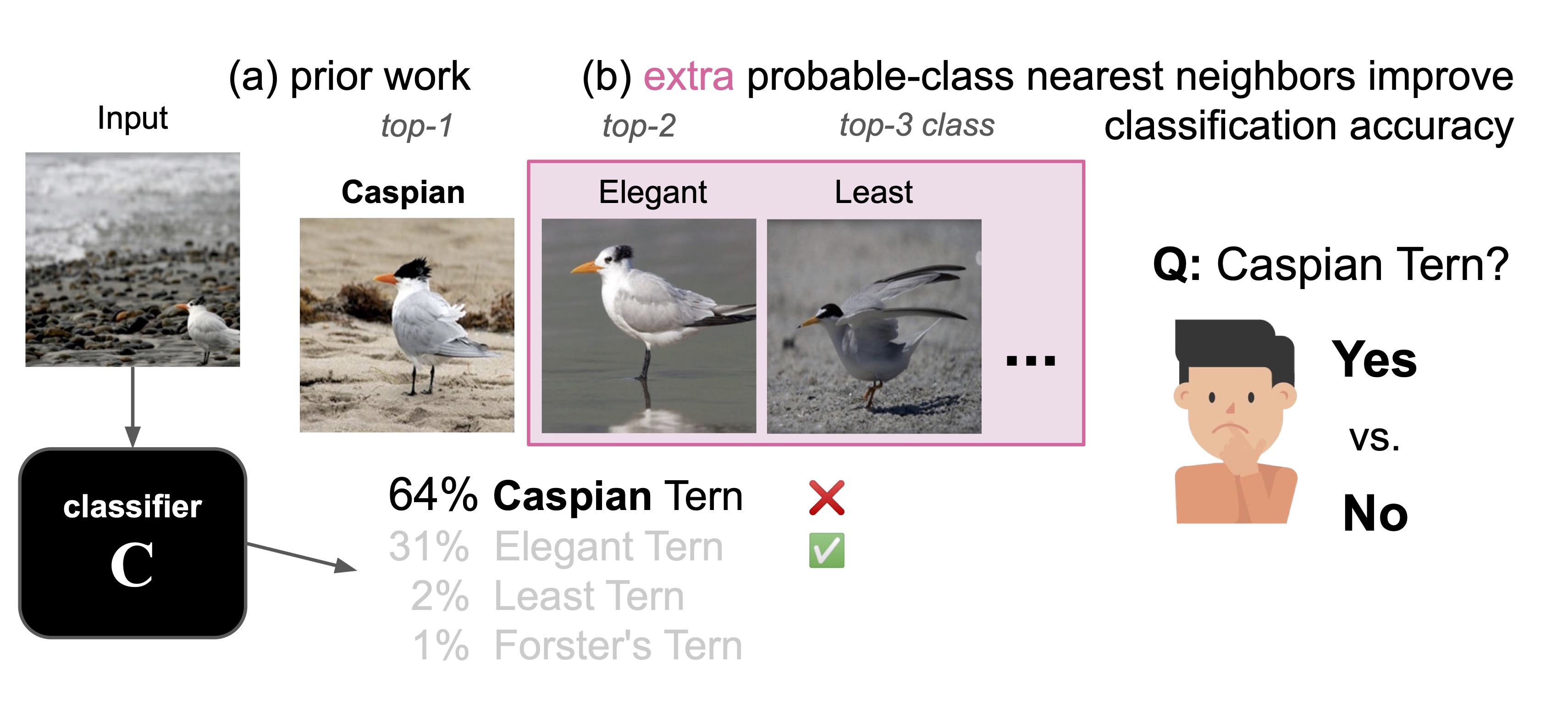

We present a new class of nearest-neighbor explanations, called Probable-Class Nearest Neighbors (PCNN), and show a novel use for this XAI method: improving the predictions of a frozen, pretrained classifier \( \mathbf{C} \). Our method consistently improves fine-grained image classification accuracy on CUB-200, Cars-196, and Dogs-120. In addition, a human study finds that showing lay users our PCNN explanations improves their decision accuracy compared with showing only top-1-class examples (as in prior work).

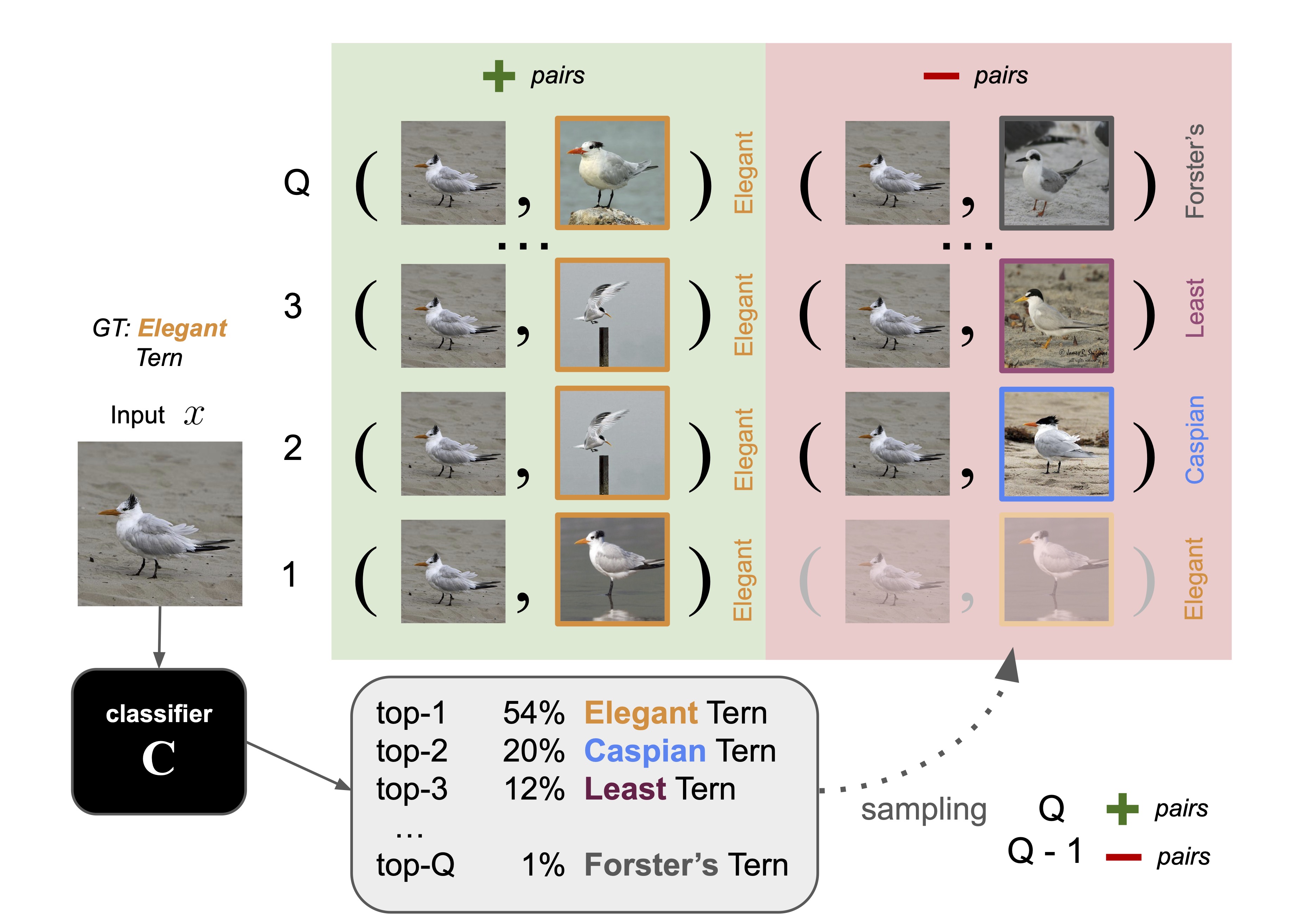

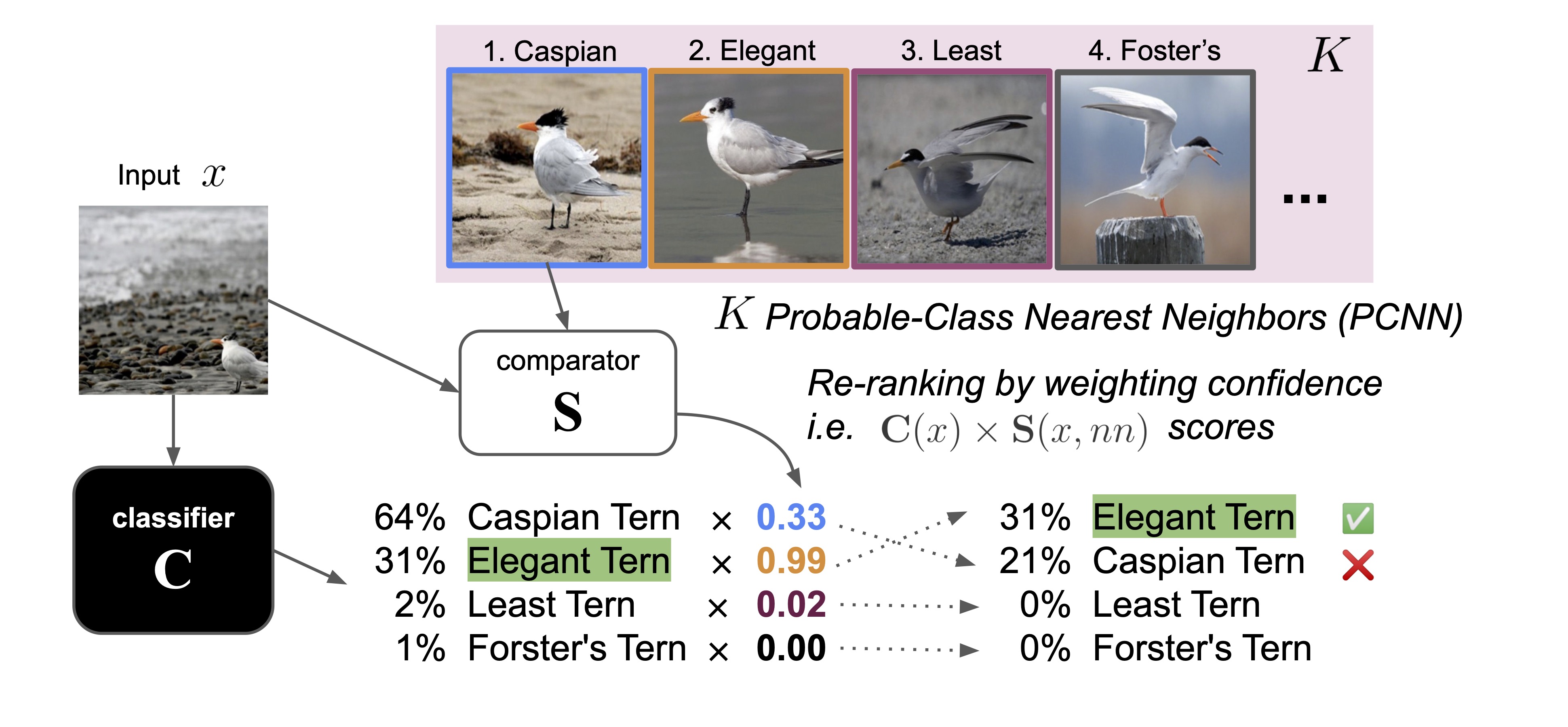

We train an image comparator network \( \mathbf{S} \) that determines whether two images belong to the same class by scoring their similarity \( \mathbf{S}(x, nn) \). \( \mathbf{S} \) is later used to re-rank the top-\( K \) classes predicted by the pretrained classifier \( \mathbf{C} \).
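To make the role of \( \mathbf{S} \) concrete, here is a minimal sketch of a pairwise comparator that outputs a sigmoid same-class probability. It is a hypothetical toy, not the paper's architecture: it assumes each image has already been embedded into a feature vector, and it fits a logistic-regression model on the element-wise absolute difference of the two embeddings using binary cross-entropy (target 1 = same class, 0 = different class). The names `ToyComparator`, `pair_features`, `score`, and `fit` are illustrative only.

```python
import math
import random


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


class ToyComparator:
    """Hypothetical stand-in for S: logistic regression on |f(x) - f(nn)|."""

    def __init__(self, dim: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.w = [rng.uniform(-0.01, 0.01) for _ in range(dim)]
        self.b = 0.0

    @staticmethod
    def pair_features(fx, fnn):
        # Element-wise absolute difference: symmetric in its two inputs.
        return [abs(a - c) for a, c in zip(fx, fnn)]

    def score(self, fx, fnn) -> float:
        """S(x, nn) in (0, 1): estimated probability that x and nn share a class."""
        phi = self.pair_features(fx, fnn)
        return sigmoid(sum(wi * pi for wi, pi in zip(self.w, phi)) + self.b)

    def fit(self, pairs, targets, lr: float = 0.5, epochs: int = 200) -> None:
        """Plain per-pair SGD on binary cross-entropy (target 1 = same class)."""
        for _ in range(epochs):
            for (fx, fnn), t in zip(pairs, targets):
                phi = self.pair_features(fx, fnn)
                err = self.score(fx, fnn) - t  # d(BCE)/d(logit) for a sigmoid output
                self.w = [wi - lr * err * pi for wi, pi in zip(self.w, phi)]
                self.b -= lr * err


# Toy usage: two same-class pairs (close embeddings), two different-class pairs.
pairs = [([0, 0], [0.1, 0]), ([1, 1], [1, 1.1]),
         ([0, 0], [2, 2]), ([1, 1], [-1, 1])]
targets = [1, 1, 0, 0]
s = ToyComparator(dim=2)
s.fit(pairs, targets)
```

The absolute-difference features keep the score symmetric, so \( \mathbf{S}(x, nn) = \mathbf{S}(nn, x) \) in this sketch; a real comparator would typically be a deep network operating on image features.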

PCNN-based sampling: For each training-set image \( x \), we sample nearest-neighbor images from the groundtruth class of \( x \) to form positive pairs. To form hard negative pairs, for each non-groundtruth class among the top-predicted (most probable) classes of \( \mathbf{C}(x) \), we take the image from that class that is nearest to \( x \). We then train the image comparator \( \mathbf{S} \) on these pairs.
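The sampling rule above can be sketched as follows. This is a minimal illustration under stated assumptions: nearest neighbors are found by Euclidean distance over precomputed feature vectors (the source does not specify the feature space here), `probs` holds \( \mathbf{C}(x) \) as a class-to-probability dictionary, and the function name `pcnn_pairs` and the parameters `top_k` and `n_pos` are hypothetical.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def pcnn_pairs(i, feats, labels, probs, top_k=3, n_pos=1):
    """Return (i, j, target) pairs for training image i; target 1 = same class."""
    x, y = feats[i], labels[i]
    # Top-k most probable classes according to the frozen classifier C(x).
    top_classes = sorted(probs, key=probs.get, reverse=True)[:top_k]

    def nearest_in_class(c, k):
        # Training images of class c, excluding x itself, sorted by distance to x.
        idx = [j for j in range(len(feats)) if labels[j] == c and j != i]
        idx.sort(key=lambda j: euclidean(x, feats[j]))
        return idx[:k]

    # Positives: nearest neighbors of x within its groundtruth class.
    pairs = [(i, j, 1) for j in nearest_in_class(y, n_pos)]
    # Hard negatives: the single nearest image from each probable non-groundtruth class.
    for c in top_classes:
        if c != y:
            pairs.extend((i, j, 0) for j in nearest_in_class(c, 1))
    return pairs


feats = [[0, 0], [0.1, 0], [5, 5], [1, 0], [0.5, 0.5], [3, 3]]
labels = [0, 0, 0, 1, 2, 3]
probs = {0: 0.5, 1: 0.3, 2: 0.15, 3: 0.05}  # hypothetical C(x_0)
print(pcnn_pairs(0, feats, labels, probs, top_k=3))
# [(0, 1, 1), (0, 3, 0), (0, 4, 0)]
```

Class 3 yields no negative here because it falls outside the top-3 predictions: the negatives come only from classes the classifier itself finds confusable with \( x \), which is what makes them hard.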

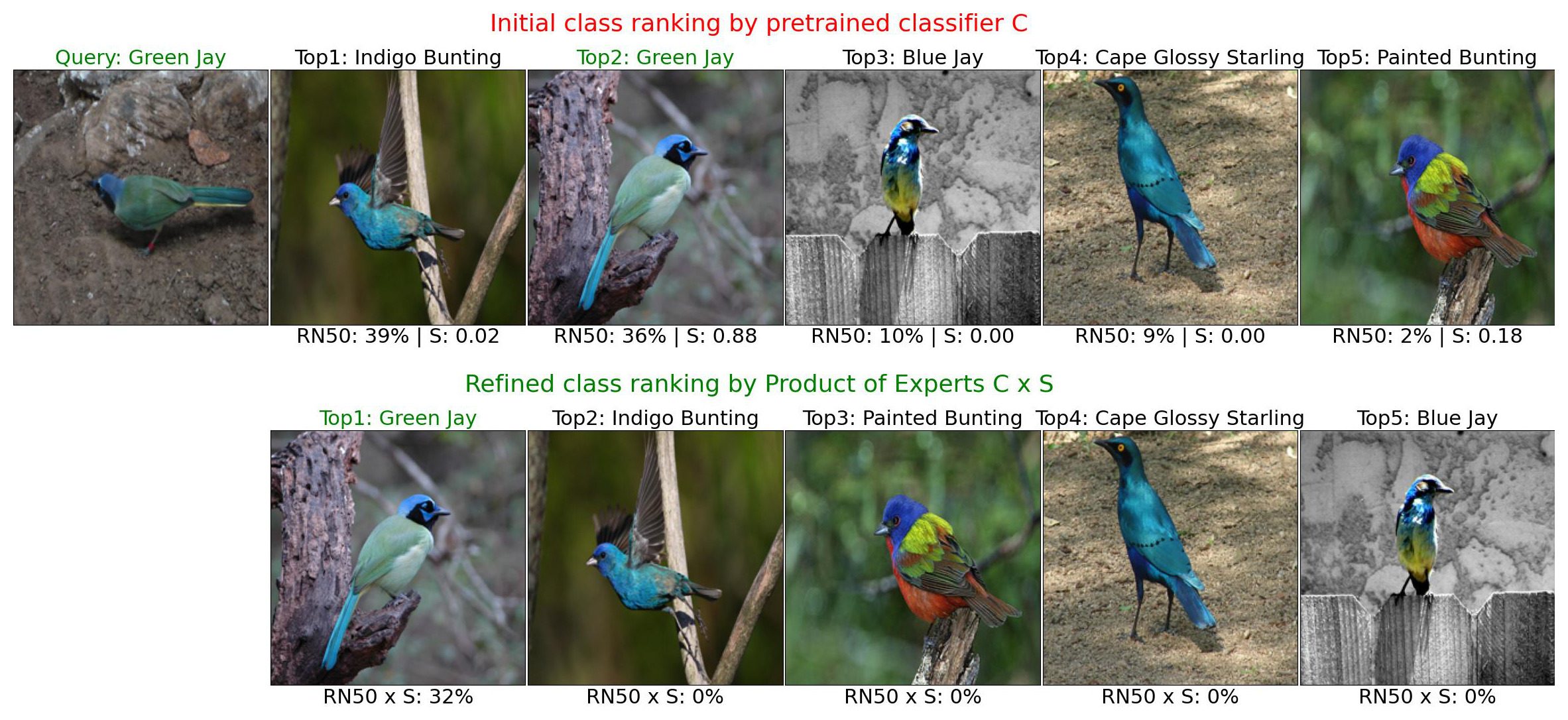

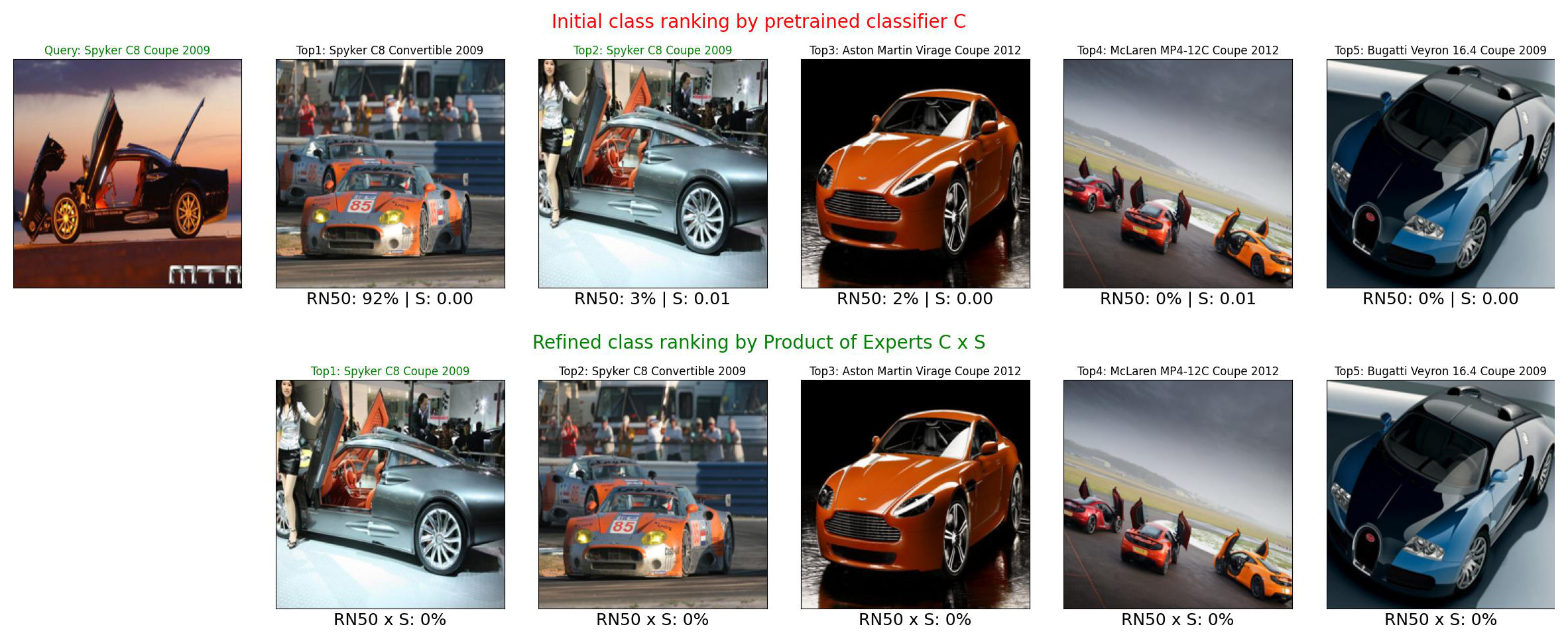

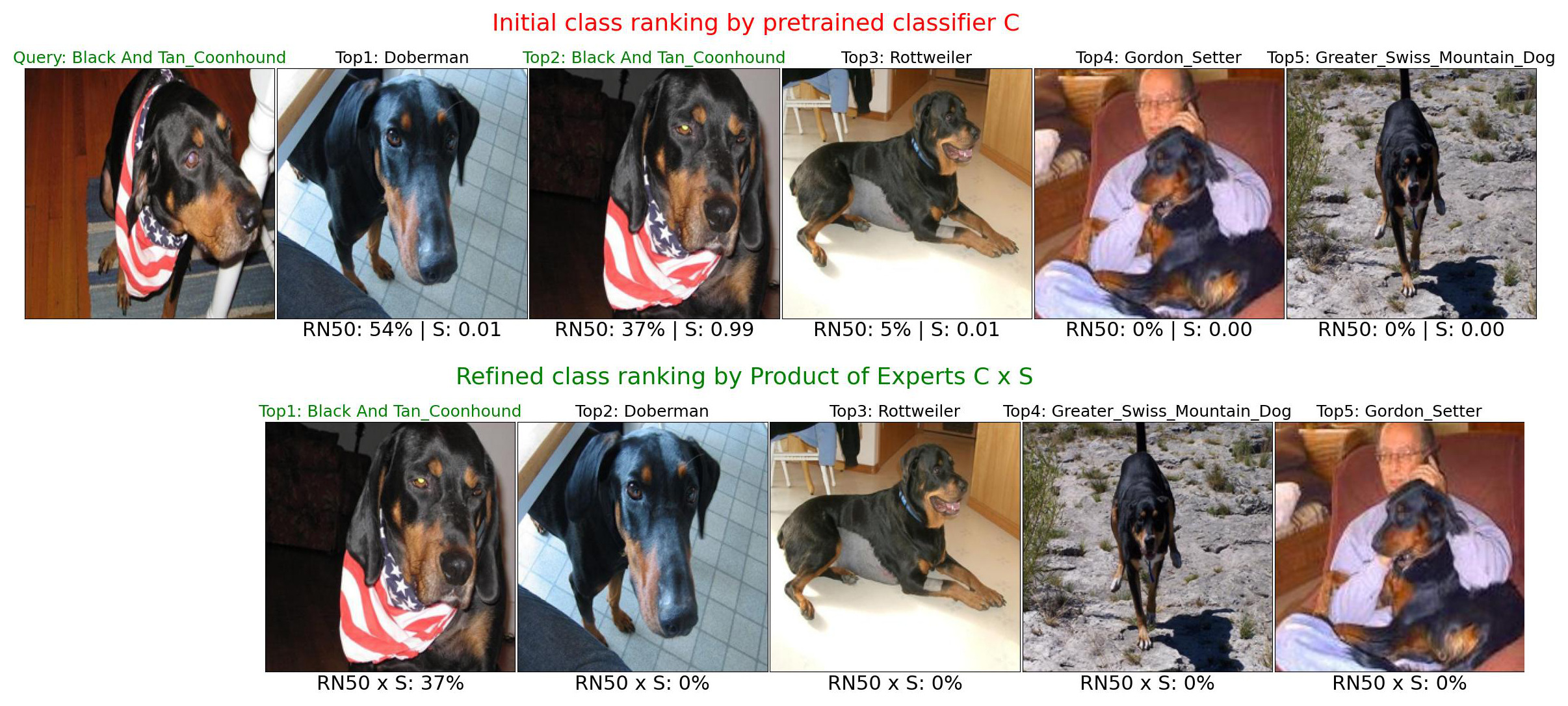

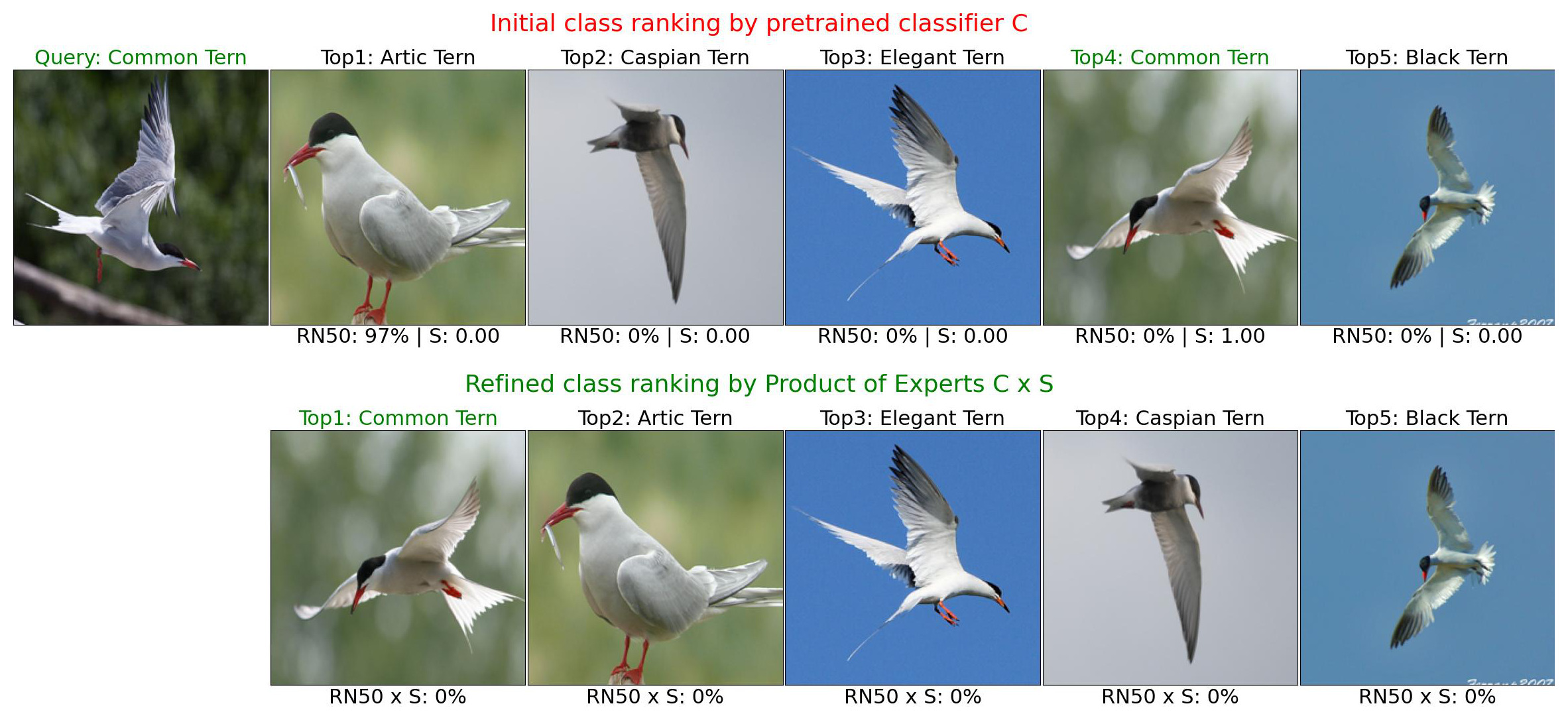

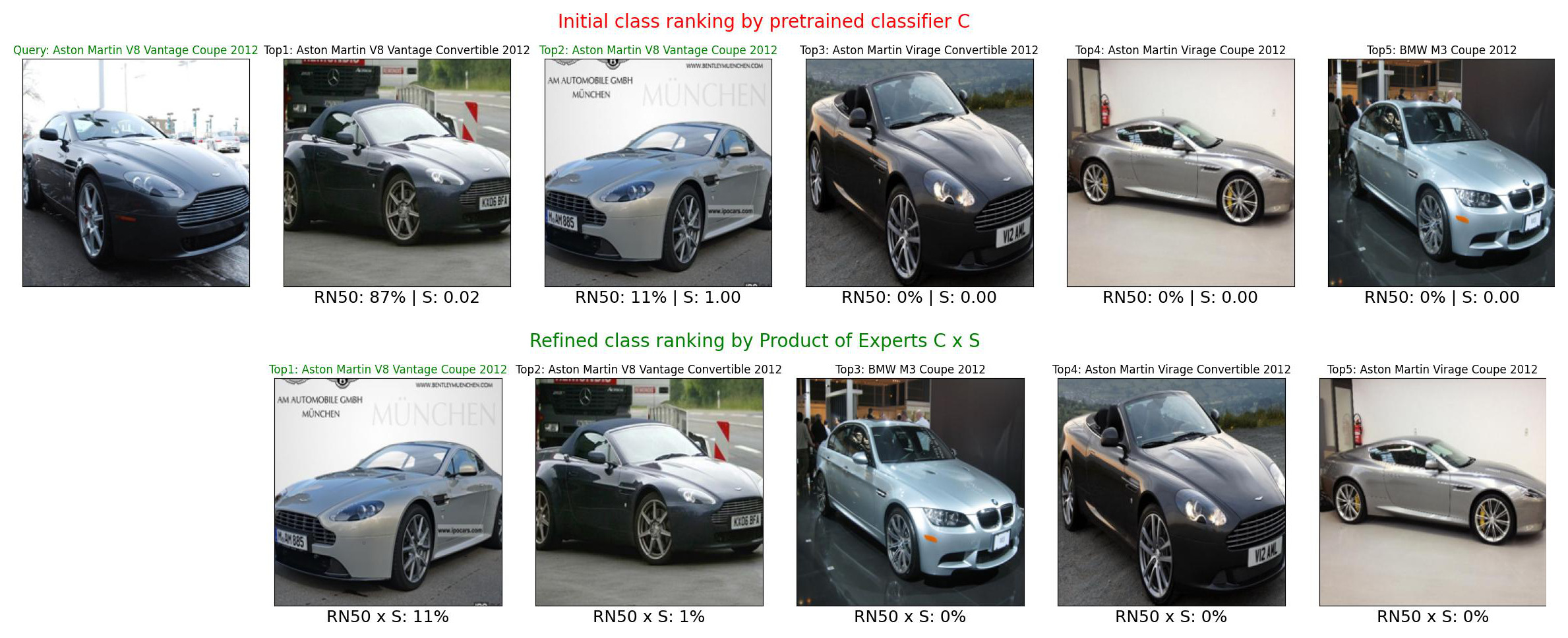

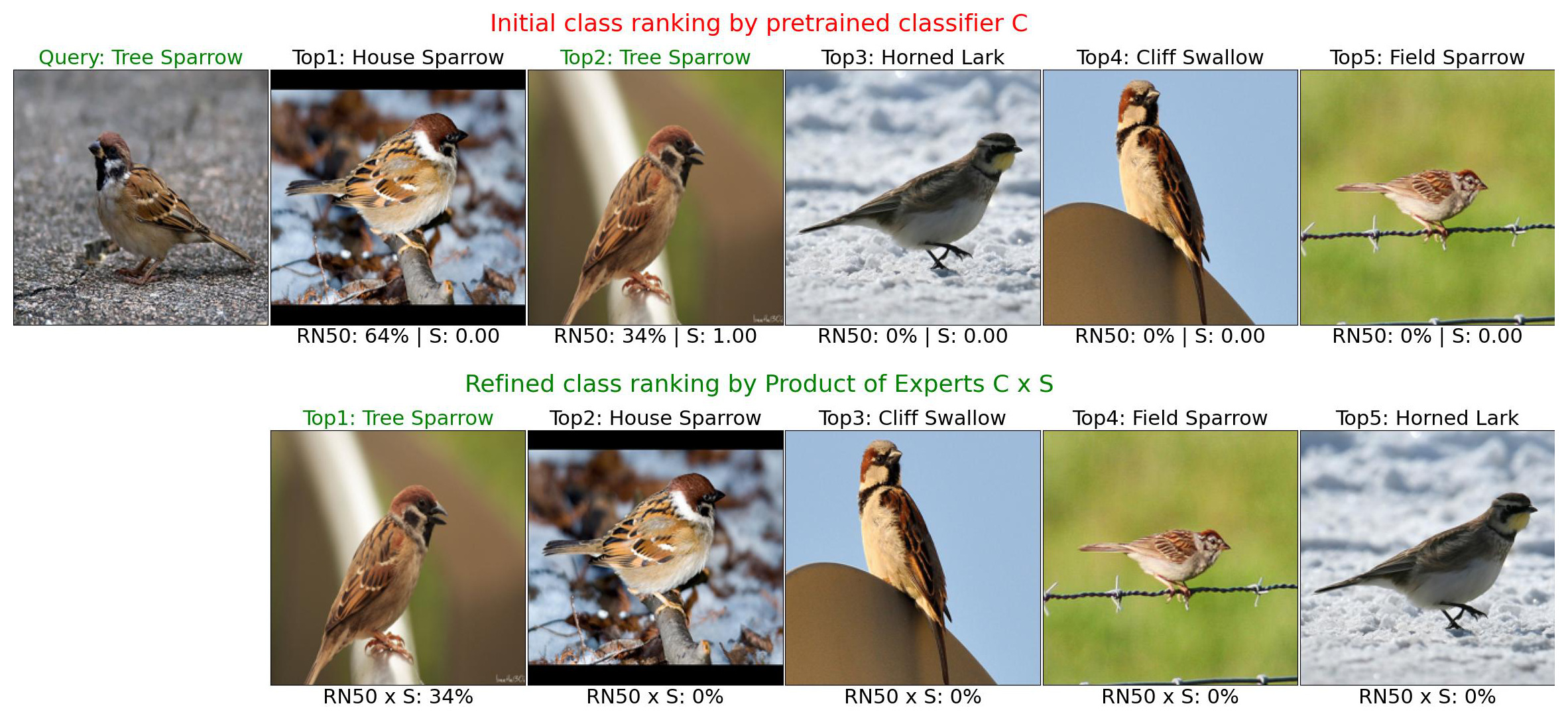

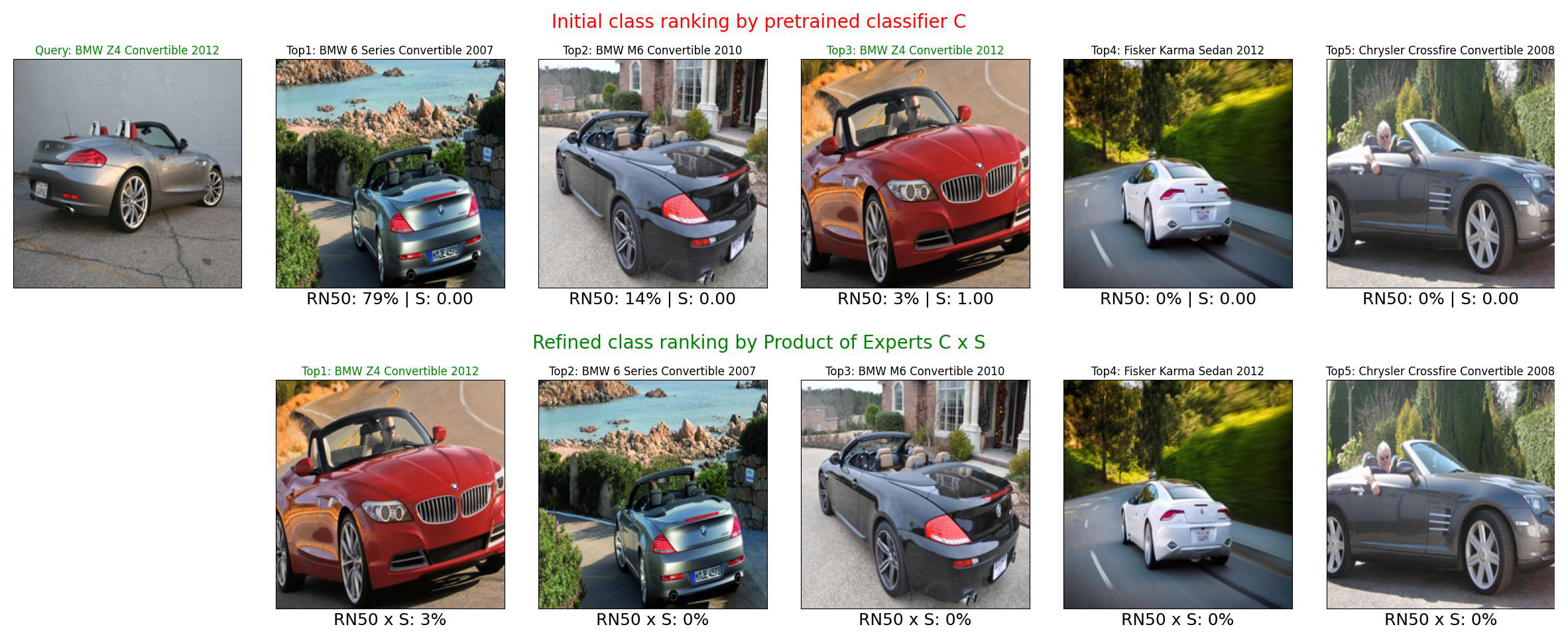

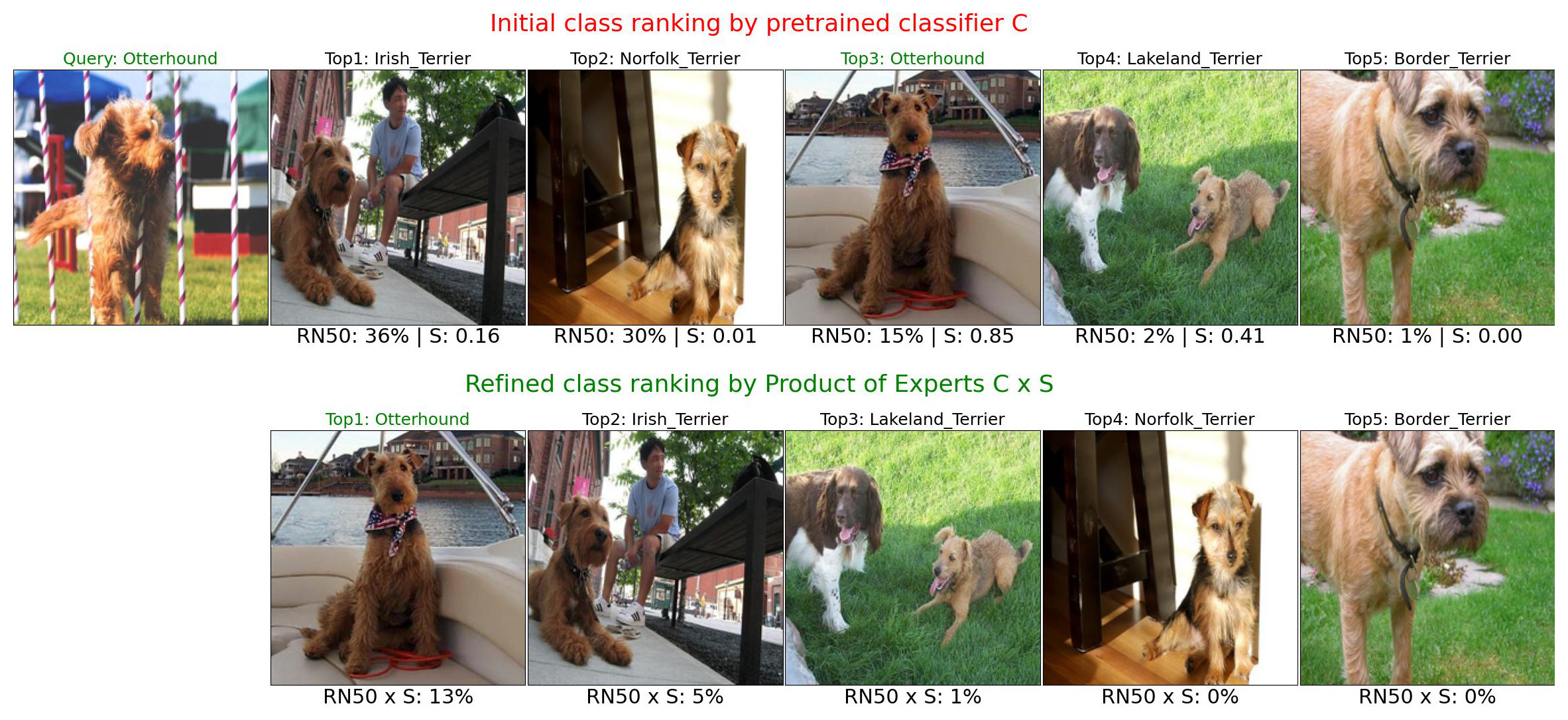

Product-of-experts (Classifier × Comparator) re-ranking algorithm: At test time, for each of the top-predicted classes of the classifier \( \mathbf{C} \), we find the nearest neighbor \( nn \) of the query \( x \) within that class and compute a sigmoid similarity score \( \mathbf{S}(x, nn) \). This score weights the original \( \mathbf{C}(x) \) probability of that class, and the labels are re-ranked by the weighted scores.
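A minimal sketch of this re-ranking step, assuming the query and training images are represented by feature vectors and neighbors are found by Euclidean distance. Each of the top-\( K \) classes \( c \) is scored as \( \mathbf{C}(x)_c \cdot \mathbf{S}(x, nn_c) \). Because the trained comparator is not reproduced here, `toy_similarity` is a hypothetical stand-in (a sigmoid of one minus the distance) used only to make the example runnable; `pcnn_rerank` and its parameters are likewise illustrative names.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def toy_similarity(fx, fnn):
    """Hypothetical stand-in for S(x, nn): a sigmoid of (1 - distance)."""
    return 1.0 / (1.0 + math.exp(euclidean(fx, fnn) - 1.0))


def pcnn_rerank(x, probs, class_feats, similarity, top_k=10):
    """Re-rank the top_k classes of C(x) by C(x)[c] * S(x, nn_c)."""
    top = sorted(probs, key=probs.get, reverse=True)[:top_k]
    scores = {}
    for c in top:
        # nn_c: the training image of class c closest to the query x.
        nn = min(class_feats[c], key=lambda f: euclidean(x, f))
        scores[c] = probs[c] * similarity(x, nn)
    ranking = sorted(scores, key=scores.get, reverse=True)
    return ranking, scores


probs = {"a": 0.5, "b": 0.4, "c": 0.1}  # hypothetical C(x)
class_feats = {"a": [[3, 0], [4, 0]], "b": [[0.2, 0]], "c": [[0, 0.1]]}
ranking, scores = pcnn_rerank([0, 0], probs, class_feats, toy_similarity, top_k=2)
print(ranking)  # ['b', 'a']
```

In this toy case the classifier alone prefers class `a`, but the nearest `a` example is far from the query, so the comparator down-weights it and class `b` moves to the top. The products are not renormalized, since only their order matters for the final label.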

Our re-ranking algorithm using \( \mathbf{S} \) improves the top-1 classification accuracy (%) of pretrained image classifiers on CUB-200, Cars-196, and Dogs-120, with gains from +0.49 to +11.78 points:

| Dataset | Pretrained on | RN18 | RN18 × S | RN34 | RN34 × S | RN50 | RN50 × S |
|---|---|---|---|---|---|---|---|
| CUB-200 | iNaturalist | N/A | N/A | N/A | N/A | 85.83 | 88.59 (+2.76) |
| CUB-200 | ImageNet | 60.22 | 71.09 (+10.87) | 62.81 | 74.59 (+11.78) | 62.98 | 74.46 (+11.48) |
| Cars-196 | ImageNet | 86.17 | 88.27 (+2.10) | 82.99 | 86.02 (+3.03) | 89.73 | 91.06 (+1.33) |
| Dogs-120 | ImageNet | 78.75 | 79.58 (+0.83) | 82.58 | 83.62 (+1.04) | 85.82 | 86.31 (+0.49) |


We conduct a human study and find that PCNN explanations help humans make more accurate decisions than showing only top-1-class nearest neighbors, as in previous work.

Transparency in authorship is a cornerstone of academic integrity. In writing this disclosure, I aim to clearly attribute the contributions made by each author involved in this project. This practice is essential to ensure that credit is given where it is due, and it aligns with my strong stance against the practice of gifted authorship, where individuals are listed as authors without having made substantial contributions to the work.

The contributions of each author to this project are detailed as follows:

@article{nguyen2024pcnn,
  title={{PCNN}: Probable-Class Nearest-Neighbor Explanations Improve Fine-Grained Image Classification Accuracy for {AI}s and Humans},
  author={Giang Nguyen and Valerie Chen and Mohammad Reza Taesiri and Anh Nguyen},
  journal={Transactions on Machine Learning Research},
  issn={2835-8856},
  year={2024},
  url={https://openreview.net/forum?id=OcFjqiJ98b},
  note={}
}